Cloud computing is a wonderful thing, whether you are a business or a consumer. It isn’t the answer to everything, but it has certainly solved some common problems, not least of which is the issue of backups. These days, for a few dollars per month, anybody can transparently back up most if not all of their important files to servers on the Internet and have those files synchronised between multiple computers and mobile devices such as smartphones and tablets.

There’s also no shortage of companies willing to offer their cloud storage services. Some services, like Amazon’s S3 service, are geared towards developers for integration into software (although Amazon now have a consumer offering), but there are many aimed at consumers who want a simple way of achieving transparent backup of their personal files. Microsoft, Symantec and Google all offer solutions, although not all are cross-platform.

Google Drive

Up until last week I used Google Drive, having taken up the service when it was launched earlier in the year. It costs $4.99 per month for 100Gb of storage and comes with software which you install on your computer. This automatically manages the synchronisation of your files, so long as you save them in the special “Google Drive” directory.

However, Google Drive was not without its problems from the very start. The software is not particularly well written and it is apparent that it has some bugs. It suffers from massive memory management problems and is prone to crashing without warning. This was especially annoying during my initial upload of files, which would have taken around a week if the software had remained running, but it did not and it would quit every few hours. Because I was either not awake or not at home to keep restarting it each time it crashed, my initial upload took far longer.

But it got there in the end, and for around six months it successfully kept my files safe and synchronised between my computers. I still had the memory issues (it typically used between 700Mb and 1Gb of RAM even when idle), and so I often found myself having to quit the software in order to free up some RAM if I needed it. This wasn’t ideal as it meant that I had to remember to restart Google Drive in order to ensure my files were kept up to date, but I lived with it.

Restoration test

Then, at the end of November, came a real test of the value of Google Drive. The hard disk in my desktop Mac Mini developed unrecoverable hardware problems, and I had to replace it. Although this was a time-consuming process it was not a disaster for me as I had all my important data in one cloud service or another. I have all my music on iTunes Match, all my development work on GitHub and all other files that I would be upset about losing in Google Drive. I have other files that aren’t on any cloud service stored on an external hard drive; these could be replaced relatively easily if I had to, so they are not worth backing up.

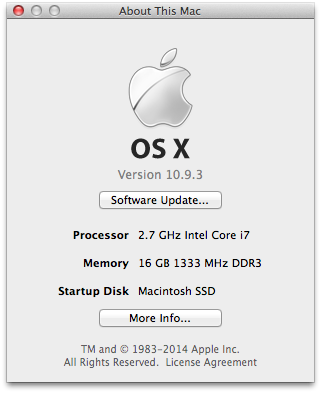

So I merrily removed the old hard disk without attempting to remove any of my data from it and installed the new one in its place (putty knives and swearing are always involved when upgrading an old-shape Mac Mini). I installed the operating system from scratch and all my software on the new hard disk and then began the process of restoring my data from the various cloud services. GitHub and iTunes Match worked like a charm straight off the bat, but Google Drive was, unfortunately, an entirely different story.

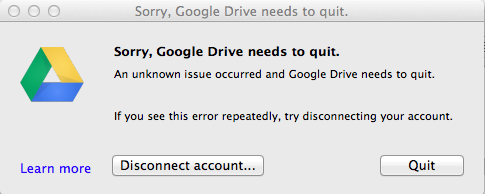

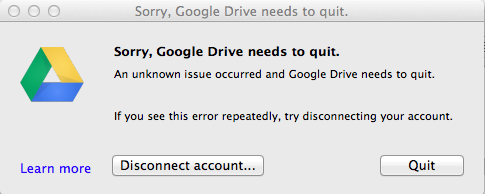

I installed the latest version of the software and entered my Google account details. It thought about it for a bit, allocated itself a whopping 3.25Gb of RAM, and then started to download my files. “OK”, I thought, “the RAM thing is even more annoying than it was before, but whatever”, and left it to do its thing. After downloading around 700Mb, it displayed a window saying that “An unknown issue occurred and Google Drive needs to quit”. The window also said that if this happens repeatedly I should disconnect my account.

It did this seven further times. Each time I was able to download around 100Mb of data before it displayed this error again. After the seventh time it didn’t download any more data, no matter how many more times I ran it. It had only downloaded 1.3Gb of my 55Gb of data. So I tried disconnecting my account and logging in again. It insisted on starting the download from scratch, forcing me to discard the 1.3Gb already downloaded. Unfortunately it did exactly the same thing: repeated errors, and then “maxing out” at around 1.3Gb of files after numerous restarts. It was, frankly, ridiculous.

Out of frustration I called upon Google’s support, which as a paying customer I was entitled to. Their suggestion, which came 48 hours later, was to uninstall and re-install the software. Needless to say I was not particularly impressed. I did not believe for a second that this would fix the problem, and suspected that I was simply being taken through a standard support script. This was the final straw with Google Drive: after all the upload issues and memory issues, now there was an apparent inability to restore from my precious backup when I needed to.

Judging by the console messages, I am 99% sure that it was crashing due to poor memory management (i.e. it was running out of memory). Given that, I considered that following their reinstallation advice would be a waste of my time, and that I would waste still more of it attempting to explain my technical suspicions to them. I needed my files back and I needed my cloud service back, on my timescale and not on Google’s.

Dropbox

I am fortunate to own two computers, and this was my saving grace. I still had the copy of the Google Drive directory on my other computer, so I still had a local and up-to-date copy of all my files. If, however, I had only one computer, I would have been entirely at the mercy of Google to get my files back. That was not something I was comfortable with, and so I decided I had two choices:

- Persevere with Google’s support and, assuming they manage to fix the issue, continue to tolerate their piss-poor software going forward.

- Use the other copy of my files I had, find an alternative cloud storage service, upload them to it, and dump Google Drive.

I chose the latter. I had heard good things about Dropbox. They are a small firm for whom online storage is their entire business, rather than just another product, which is the case for Google. It is absolutely in their interest to get their offering right, because if they don’t, they don’t have a dominant global search engine business (for example) to fall back upon. I wouldn’t be surprised if Google Drive grew half-arsed out of a project that a Google developer created on his “do your own thing” day of the week, a privilege extended to Google developers as standard, to the envy of most others.

Dropbox is twice the price of Google Drive, costing $9.99 per month for 100Gb instead of $4.99. This isn’t a high price to pay for a reliable solution in my opinion. Like Google Drive, it too comes with software to be installed on your computer(s) which creates a special directory into which you save your files and it sits there in the background and uploads and downloads files as required. The difference between the Dropbox software and the Google Drive software is that the Dropbox software does so without using all your RAM and without quitting every few hours. Amazeballs!

It took around 7 days to upload my files to Dropbox, during which the software did not crash even once and used no more than 400Mb of RAM at its peak. I was supremely impressed with this. By contrast, Google Drive’s memory management was so poor that it never released memory once it no longer needed it; its RAM usage just kept going up and up and up. This is how Google Drive should have been from the very beginning, and the fact that Dropbox can do it means there is no excuse for Google Drive not to be able to. I am currently in the process of downloading these newly uploaded files to my other computer en masse and, guess what, still no crashes. It doesn’t seem to think that downloading 55Gb is somehow an insurmountable task, so it doesn’t give up after the first 1.3Gb.
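If you want more than a gut feeling that a restore like this has actually completed, one option is to compare the restored directory against a known-good copy. The following is only a rough sketch, not something either Dropbox or Google Drive provides: the function names `tree_digests` and `compare_trees` are my own, and it assumes both copies are ordinary local directories that have finished syncing.

```python
import hashlib
from pathlib import Path


def tree_digests(root):
    """Map each file's path (relative to root) to the SHA-256 of its contents."""
    root = Path(root)
    digests = {}
    for path in sorted(root.rglob("*")):
        if path.is_file():
            h = hashlib.sha256()
            with path.open("rb") as fh:
                # Read in 1 MiB chunks so large files do not need to fit in RAM.
                for chunk in iter(lambda: fh.read(1 << 20), b""):
                    h.update(chunk)
            digests[path.relative_to(root).as_posix()] = h.hexdigest()
    return digests


def compare_trees(original, restored):
    """Return (missing, changed) relative to the original copy.

    missing: files present in the original but absent from the restored copy.
    changed: files present in both whose contents differ.
    """
    a = tree_digests(original)
    b = tree_digests(restored)
    missing = sorted(set(a) - set(b))
    changed = sorted(p for p in a.keys() & b.keys() if a[p] != b[p])
    return missing, changed
```

Calling `compare_trees()` with the path of the known-good copy and the path of the freshly restored one returns two lists; if both are empty, every file in the original made it across intact. It does not report extra files that exist only in the restored copy.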

Other things I like about Dropbox:

- Great mobile app for iPhone and iPad. This, too, Just Works, and allows viewing of a wide range of file types. It also backs up the camera photos from each device, which is a nice touch.

- It has an API, which allows it to be integrated into other software and services, such as IFTTT. This is more exciting for me than it probably would be for most people, but it’s something that Google Drive doesn’t have.

Of course, Dropbox may well not be without its own problems which are not yet apparent. If any transpire I will of course report on them, but initial tests and use of the service are very promising, and certainly far better than the comparable early days with Google Drive.

So there you are. If you’re looking for advice on which cloud backup service to use, I recommend Dropbox. It’s compatible with Mac OS, Linux, Microsoft Windows, iOS (iPhone, iPad) and Android. Enjoy.